Big Data

Services You Can Depend On

Open-source frameworks for Big Data

An overview of the current state-of-the-art open-source frameworks for Big Data, and of our value-added approach to getting you all the way to the promised land of Big Data.

In the beginning, Hadoop was simply about batch processing and the distributed file system. However, rapid developments in technology have brought us to the much-talked-about Lambda Architecture.

There is no silver bullet, even in the Big Data world, for all its advantages over traditional data management systems such as OLTP (Online Transaction Processing), data warehouses running Online Analytical Processing (OLAP), and object and relational models. More on that shortly.

We have arrived today at a place where the Hadoop ecosystem makes it possible to handle unstructured and structured data in a single system. It has also made it possible to store data on a scale not seen before, making archiving less relevant. Today your archive can be online and accessible almost instantly, allowing you to analyze historical data as well as live data in near real time. The bleeding edge of open source is the Lambda Architecture, which combines the batch processing approach with a speed layer and a service layer. We are witnessing a convergence of approaches borrowed from SOA, batch processing, and traditional data management systems. Let’s look at this in some detail.

Lambda Architecture

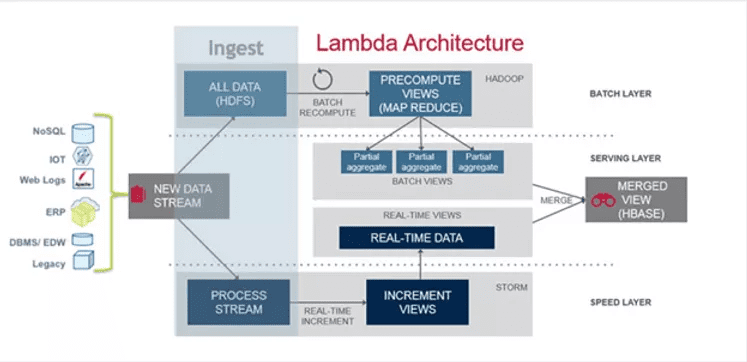

The diagram represents the Lambda Architecture.

With the Lambda Architecture, we have almost arrived at the promised land of Big Data, with one glaring issue. Data in this architecture is immutable, so how do we update the data when a value changes? That is solved by maintaining timestamps on all data: when a value changes, a new event carrying the most recent data is stored in the batch layer. Naturally, the next question is whether the history of all previous values is necessary or even relevant. It is not, and we couldn’t keep data forever anyway. Retention policies are necessary to delete old versions of the data, and even more so to address privacy concerns and legal requirements. We can provide expertise in creating the data governance program necessary for applying these policies.
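The timestamp approach described above can be illustrated with a minimal Python sketch. The names `Event`, `current_values`, and `apply_retention` are hypothetical and not part of any Hadoop API, and the retention rule shown (always keep the newest version of each key) is an assumption; a real policy driven by privacy or legal requirements may need to delete that version too.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)  # frozen: an event cannot be modified once created
class Event:
    key: str
    value: str
    timestamp: datetime


def current_values(events):
    """Return the most recent value per key; earlier events are left untouched."""
    latest = {}
    for e in events:
        if e.key not in latest or e.timestamp > latest[e.key].timestamp:
            latest[e.key] = e
    return {k: e.value for k, e in latest.items()}


def apply_retention(events, now, max_age):
    """Drop superseded versions older than max_age (assumption: newest is always kept)."""
    newest = {}
    for e in events:
        if e.key not in newest or e.timestamp > newest[e.key].timestamp:
            newest[e.key] = e
    return [e for e in events if e == newest[e.key] or now - e.timestamp <= max_age]


t0 = datetime(2024, 1, 1)
log = [
    Event("address", "old street", t0),
    Event("address", "new street", t0 + timedelta(days=400)),  # an "update" is a new event
]
print(current_values(log))
kept = apply_retention(log, now=t0 + timedelta(days=401), max_age=timedelta(days=30))
```

An update never overwrites anything: it appends a newer event, and readers resolve the current value by timestamp.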

Ingest

Ingest is a cross-cutting concern. Data flows in and is ingested along two major paths:

- All Data (HDFS). This path stores data in HDFS for later batch processing.

- Process Stream. This data can be ingested and analyzed in flight with tools such as Apache Spark, Hive on Spark, and Impala. In fact, Spark is quickly replacing Hadoop MapReduce as the approach of choice for big data processing.
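As a rough illustration of the two ingest paths, the following Python sketch sends every record to both. A plain list stands in for HDFS and a simple callback stands in for a stream processor such as Spark; `ingest`, `raw_store`, and `count_by_type` are hypothetical names chosen for this example.

```python
def ingest(records, raw_store, stream_handler):
    """Send every record down both paths."""
    for record in records:
        raw_store.append(record)  # "All Data" path: stored as-is for later batch processing
        stream_handler(record)    # "Process Stream" path: analyzed while in flight


raw_store = []  # stand-in for HDFS (assumption for illustration only)
counts = {}     # running result maintained by the stream path


def count_by_type(record):
    """A toy in-flight analysis: count records per event type."""
    counts[record["type"]] = counts.get(record["type"], 0) + 1


ingest(
    [{"type": "click"}, {"type": "view"}, {"type": "click"}],
    raw_store,
    count_by_type,
)
```

The point of the fan-out is that the stream path can answer questions immediately, while the raw store keeps everything for batch jobs that run later.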

Batch

The batch layer stores all the data with no constraint on the schema. The schema is applied on read, when the batch views are built for the serving layer. Creating schema-on-read views requires algorithms that parse the data from the batch layer and convert it into a readable form. The advantage is that the data can evolve freely, as there is no constraint on its structure. You are, however, responsible for ensuring that the algorithms that build the views keep them consistent. The iterative nature of processing common in big data therefore calls for processing pipelines that make it easy to return to an earlier state during an iteration without much rework. We offer this as a value-add to our clients.
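A minimal sketch of schema-on-read in Python, assuming the raw data is stored as one JSON document per line. The field names (`user`, `amount`) and the helper functions are hypothetical. The raw lines are never altered; the schema exists only in the code that reads them.

```python
import json

# Raw records as stored in the batch layer: no schema is enforced on write.
RAW_LINES = [
    '{"user": "ann", "amount": "12.50"}',
    '{"user": "bob", "amount": "3", "coupon": "X1"}',  # extra field is allowed
    "not json at all",                                 # malformed data is stored too
]


def read_with_schema(lines):
    """Apply the schema at read time and skip records that do not fit it."""
    for line in lines:
        try:
            raw = json.loads(line)
            yield {"user": str(raw["user"]), "amount": float(raw["amount"])}
        except (ValueError, KeyError, TypeError):
            continue  # the view-building code decides how to handle bad records


def build_batch_view(lines):
    """Build a simple batch view: total amount per user."""
    totals = {}
    for row in read_with_schema(lines):
        totals[row["user"]] = totals.get(row["user"], 0.0) + row["amount"]
    return totals


print(build_batch_view(RAW_LINES))
```

Because the view is derived entirely from the raw data, changing the schema means changing `read_with_schema` and rebuilding the view, not migrating stored data.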

Speed

The batch layer has an obvious latency issue. The speed layer solves that issue by receiving and processing the most recent data arriving in your cluster that has not yet been stored in the batch layer. Thus, it provides a more complete and accurate view of the data, current to the second.
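One way to picture this is the Python sketch below, in which the speed layer holds only the events the batch layer has not absorbed yet. The `SpeedLayer` class and the `batch_watermark` parameter are hypothetical names, and integer timestamps are used for simplicity.

```python
class SpeedLayer:
    """Keeps an up-to-date view over events not yet covered by the batch layer."""

    def __init__(self):
        self.pending = []  # (timestamp, key, amount) tuples

    def on_event(self, timestamp, key, amount):
        """Record a newly arrived event."""
        self.pending.append((timestamp, key, amount))

    def realtime_view(self):
        """Aggregate only the recent events held by this layer."""
        view = {}
        for _, key, amount in self.pending:
            view[key] = view.get(key, 0) + amount
        return view

    def expire(self, batch_watermark):
        """Forget events at or before the point the batch layer has processed."""
        self.pending = [e for e in self.pending if e[0] > batch_watermark]


speed = SpeedLayer()
speed.on_event(1, "ann", 5)
speed.on_event(2, "ann", 2)
speed.on_event(3, "bob", 1)
before = speed.realtime_view()
speed.expire(batch_watermark=2)  # the batch layer has caught up to timestamp 2
after = speed.realtime_view()
```

Expiring absorbed events keeps the speed layer small and avoids counting the same data twice once the batch views include it.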

Service

The service layer uses both the batch and speed layers to assemble the most complete and accurate view of the data, from which the business value is extracted.
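A query against the service layer can be sketched as a merge of the two views. The Python example below assumes the views hold additive totals per key, so merging is a simple sum; other kinds of views would need a different merge rule. The `query` function and the sample data are hypothetical.

```python
def query(key, batch_view, realtime_view):
    """Combine the precomputed batch view with the speed layer's recent view."""
    return batch_view.get(key, 0) + realtime_view.get(key, 0)


batch_view = {"ann": 100, "bob": 40}  # built by the batch layer, possibly hours old
realtime_view = {"ann": 7, "cy": 1}   # recent events only, from the speed layer

for user in ("ann", "bob", "cy"):
    print(user, query(user, batch_view, realtime_view))
```

The caller sees a single answer and does not need to know which layer contributed which part of it.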

Getting Your Organization To The Next Level

We recognize that the state of affairs in Big Data is pretty good, even revolutionary, but it is not perfect. We also recognize that although we do have skilled staff, some endeavors are better left to the commercial product experts. And we recognize that sometimes choosing an existing commercially available product is the cheaper alternative, and we are not shy about saying so.

We looked at all the available distributions and solutions to the problem, and we were pretty excited by Cloudera’s approach with their distribution. In fact, it is pretty awesome. We chose to partner with Cloudera and offer services around their product to our customers who require or want to go that extra mile to achieve the elusive Big Data Nirvana.

We Choose Cloudera

We partnered with Cloudera because they are the de facto Big Data leaders. Their product has the best-integrated stack and a smooth, consistent user interface. Many of the services we seek to build are already built into the product rather than added on as an afterthought. They quite simply blow away the competition. Our customers do not have to use Cloudera or any commercial product. We can service the free open-source solutions, but we highly recommend you give Cloudera a try.

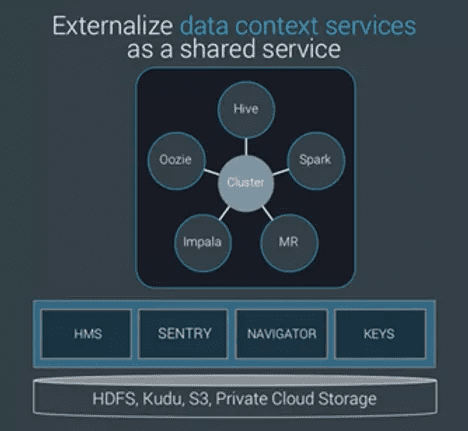

The ease of use is not the only advantage. We like how Cloudera has solved the Lambda Architecture’s weakness by externalizing the data context.

Data Context

The diagram shows the externalized data context and support for both on-premises and cloud workloads.

Data Context: Cloudera stores the data context as a service in the cluster rather than alongside the data. It is then exposed as a shared service with the following advantages:

- Common schemas (Hive Metastore), access permissions (Sentry and Kerberos), governance, and classifications across all workloads, on premises and in the cloud.

- Lower running costs: Less hardware and software to manage.

- Better productivity: Data is presented consistently across clusters without any effort on your part. Your developers can concentrate on solving your business problem, not on managing the infrastructure.

- Flexibility to deploy to the cloud or on premises and to move workloads easily between the two. A cloud-based deployment such as Amazon EMR may not always be the cheapest option. Wouldn’t you rather have the option to choose based on actual costs?

- Faster expansion: The administrators do not have to recreate the data context with every new cluster you add. This goes right to the bottom line. Besides, it’s less prone to human error.

Conclusion

Whatever your choice, we know the industry best practices and can help you implement them. When you are ready to scale, move to the cloud, or take that next step and use Cloudera, our staff will be there to advise and support you. We put your best interests first and give you a list of options, along with the pros and cons of each. We think our partnership with Cloudera puts an industry leader behind us and strengthens what we can offer.